The Ethical Imperative in AI Data Extraction

The rapid evolution of AI data extraction technology has transformed how organizations collect, process, and leverage information from documents and digital sources. For businesses implementing intelligent document processing, ethical considerations aren’t just compliance checkboxes but critical foundations for building trustworthy AI systems that deliver sustained value.

Organizations that prioritize ethical AI governance tend to earn higher stakeholder trust and better long-term outcomes from their AI investments. In late 2023, the White House issued an executive order intended to help ensure AI safety and security. That order set out a framework for establishing new standards to manage the risks inherent in AI technology. As regulatory landscapes evolve with frameworks like the EU AI Act and similar legislation developing globally, businesses must move beyond minimum compliance and adopt comprehensive ethical frameworks.

Core Ethical Challenges in AI-Driven Data Extraction

Data Privacy and Informed Consent

For organizations implementing AI-driven data extraction, privacy protection represents both a legal requirement and an ethical imperative. Effective privacy safeguards include:

- Data minimization principles that limit collection to necessary information

- Purpose limitation that clearly defines how extracted data will be used

- Advanced anonymization techniques that reduce the risk of re-identification while maintaining data utility

- On-device processing capabilities that minimize data transmission risks
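To make the first safeguards concrete, here is a minimal sketch of data minimization combined with pseudonymization of extracted fields. The field names, the allow-list, and the `minimize` helper are hypothetical and chosen only for illustration. Note that keyed hashing is pseudonymization rather than full anonymization: anyone holding the key can still link records, so the key needs the same protection as the data itself.

```python
import hashlib
import hmac

# Hypothetical allow-list: the only fields this pipeline is permitted to keep.
ALLOWED_FIELDS = {"vendor_name", "invoice_total", "invoice_date", "customer_email"}
# Hypothetical set of direct identifiers that are replaced with keyed hashes.
PSEUDONYMIZE_FIELDS = {"customer_email"}


def pseudonymize(value: str, secret_key: bytes) -> str:
    """Replace an identifier with a keyed HMAC-SHA256 digest (hex string)."""
    return hmac.new(secret_key, value.encode("utf-8"), hashlib.sha256).hexdigest()


def minimize(record: dict, secret_key: bytes) -> dict:
    """Keep only allow-listed fields and pseudonymize direct identifiers."""
    cleaned = {}
    for field, value in record.items():
        if field not in ALLOWED_FIELDS:
            continue  # data minimization: drop anything not explicitly needed
        if field in PSEUDONYMIZE_FIELDS:
            value = pseudonymize(str(value), secret_key)
        cleaned[field] = value
    return cleaned


if __name__ == "__main__":
    extracted = {
        "vendor_name": "Acme Supplies",
        "invoice_total": 129.50,
        "customer_email": "jane@example.com",
        "card_number": "4111111111111111",  # not on the allow-list, so it is dropped
    }
    print(minimize(extracted, secret_key=b"demo-key-do-not-use"))
```

An allow-list is used instead of a block-list so that any new field the extractor starts producing is dropped by default until someone decides it is needed.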

The concept of informed consent becomes particularly complex with AI systems that continuously evolve in their capabilities and applications. Ethical approaches require:

- Transparent communication about data extraction purposes in accessible language

- Clear disclosure of AI involvement in data processing

- Meaningful options for controlling data usage

- Mechanisms for modifying or withdrawing consent as circumstances change
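One way to support the last two points is to track consent per purpose and check it before every processing step. The sketch below is an assumption-laden illustration: the `ConsentRecord` class and the purpose names are hypothetical, and a real system would persist the history in durable storage.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class ConsentRecord:
    """Per-subject consent, tracked by purpose, with an append-only history."""
    subject_id: str
    purposes: set = field(default_factory=set)
    history: list = field(default_factory=list)

    def _log(self, action: str, purpose: str) -> None:
        # Record when each change happened so consent decisions can be audited.
        timestamp = datetime.now(timezone.utc).isoformat()
        self.history.append((timestamp, action, purpose))

    def grant(self, purpose: str) -> None:
        self.purposes.add(purpose)
        self._log("grant", purpose)

    def withdraw(self, purpose: str) -> None:
        self.purposes.discard(purpose)
        self._log("withdraw", purpose)

    def allows(self, purpose: str) -> bool:
        """Processing code should call this before using data for a purpose."""
        return purpose in self.purposes


if __name__ == "__main__":
    consent = ConsentRecord(subject_id="user-123")
    consent.grant("expense_reporting")
    print(consent.allows("expense_reporting"), consent.allows("model_training"))
    consent.withdraw("expense_reporting")
    print(consent.allows("expense_reporting"))
```

Keeping consent scoped to a named purpose means that a new use of the data, such as model training, requires a new grant instead of inheriting an earlier one.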

Bias and Fairness in Data Collection

AI systems inevitably reflect the data upon which they are trained. When document extraction processes systematically underrepresent certain populations or incorporate historical biases, the resulting systems perpetuate and potentially amplify these inequities.

Financial services organizations implementing invoice processing systems, for example, might inadvertently build models that perform differently across vendor demographics if their training data lacks diversity. A 2024 study published in the World Journal of Advanced Research and Reviews analyzes the risks of AI reinforcing systemic inequities in financial decision-making and states that “biases in training data, model design, and deployment processes can lead to discriminatory outcomes.” In financial document processing, such bias can surface as disparities in processing times and approval rates.

Technical approaches to mitigate bias include:

- Diverse training datasets that represent various document formats, languages, and sources

- Regular algorithmic audits to detect and mitigate potential bias

- Cross-disciplinary review teams that combine technical expertise with domain knowledge

- Continuous monitoring for performance disparities across different groups
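The audit and monitoring steps above can start from something as simple as comparing extraction accuracy per group. The following sketch assumes each evaluation result is a `(group, is_correct)` pair, where the group might be a document language or vendor region. The function names and the 5-point gap threshold are illustrative assumptions, not an established standard.

```python
from collections import defaultdict


def accuracy_by_group(results):
    """Compute accuracy per group from an iterable of (group, is_correct) pairs."""
    totals = defaultdict(int)
    correct = defaultdict(int)
    for group, is_correct in results:
        totals[group] += 1
        correct[group] += int(is_correct)
    return {group: correct[group] / totals[group] for group in totals}


def flag_disparities(results, max_gap=0.05):
    """Return groups whose accuracy trails the best group by more than max_gap."""
    accuracy = accuracy_by_group(results)
    if not accuracy:
        return {}
    best = max(accuracy.values())
    return {g: a for g, a in accuracy.items() if best - a > max_gap}


if __name__ == "__main__":
    # Hypothetical evaluation results: 10 English and 10 Spanish invoices.
    evaluation = (
        [("en", True)] * 9 + [("en", False)]
        + [("es", True)] * 7 + [("es", False)] * 3
    )
    print(accuracy_by_group(evaluation))
    print(flag_disparities(evaluation))
```

A gap against the best-performing group is only one possible fairness measure. With small samples per group, a flagged gap should be treated as a prompt for closer review, not as proof of bias.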

Transparency and Explainability

As AI extraction models become more complex, their internal decision-making mechanisms often become increasingly opaque. This “black box” problem creates significant challenges for accountability and trust.

Organizations implementing responsible AI data extraction should prioritize:

- Explainable AI approaches that provide insights into extraction decisions

- Confidence scores that communicate certainty levels for extracted information

- Audit trails documenting how data was processed

- Human oversight for critical decisions based on extracted data
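As a rough illustration of how confidence scores, audit trails, and human oversight fit together, the sketch below routes low-confidence fields to a review queue and writes one audit line per document. The 0.85 threshold, the field layout, and the function names are assumptions made for this example and do not describe any particular product's API.

```python
import json
from datetime import datetime, timezone

REVIEW_THRESHOLD = 0.85  # hypothetical cutoff; tune per field and risk level


def route_fields(fields, threshold=REVIEW_THRESHOLD):
    """Split {name: (value, confidence)} into auto-accepted and needs-review."""
    accepted, review = {}, {}
    for name, (value, confidence) in fields.items():
        target = accepted if confidence >= threshold else review
        target[name] = {"value": value, "confidence": confidence}
    return accepted, review


def audit_entry(document_id, accepted, review, model_version):
    """Build one JSON audit-trail line describing how a document was handled."""
    return json.dumps({
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "document_id": document_id,
        "model_version": model_version,
        "auto_accepted": sorted(accepted),
        "sent_to_review": sorted(review),
    })


if __name__ == "__main__":
    extracted = {"total": ("42.10", 0.99), "vendor": ("Acme Supplies", 0.61)}
    accepted, review = route_fields(extracted)
    print(audit_entry("doc-001", accepted, review, model_version="demo-v1"))
```

The audit line records field names and the model version but not the extracted values, so the trail itself does not become another store of sensitive data.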

A 2024 study referenced in an SSRN paper found that “transparently displaying these criteria on the label led to even higher levels of trust in the AI system. Furthermore, ‘Transparency’ itself was identified as the most crucial requirement for fostering trust in AI.”

The Regulatory Landscape for AI Data Extraction

The regulatory environment governing AI data extraction continues to evolve globally:

- The EU AI Act classifies AI systems, including those used for document extraction, by risk level and imposes graduated requirements

- The California Consumer Privacy Act (CCPA) and similar state laws establish consent and transparency requirements

- GDPR mandates data minimization, purpose limitation, and various data subject rights

- Industry-specific regulations in finance, healthcare, and other sectors impose additional compliance obligations

Legal experts anticipate increased regulatory activity in this space through 2025 and beyond.

Organizations should develop comprehensive compliance programs that:

- Monitor regulatory developments across relevant jurisdictions

- Translate legal requirements into technical and operational procedures

- Document compliance efforts through comprehensive record-keeping

- Engage proactively with regulators to help shape evolving standards

Building an Effective AI Governance Framework

Organizational Structure for AI Governance

Effective governance starts with clear organizational structures that establish accountability for ethical AI data extraction:

- Executive-level ownership of AI ethics and governance

- Cross-functional AI ethics committee with representation from technical, legal, compliance, and business teams

- Dedicated AI ethics specialists who can evaluate data extraction systems

- Frontline oversight by teams directly implementing AI extraction solutions

For enterprise organizations, this might mean establishing a dedicated AI Ethics Office reporting directly to the C-suite, while smaller companies might designate ethics responsibilities within existing governance structures.

Risk Assessment and Management

A comprehensive risk framework for AI data extraction should address:

- Privacy risks including potential data breaches or unintended disclosures

- Bias and fairness risks that could affect different stakeholder groups

- Transparency gaps that might undermine trust or accountability

- Regulatory compliance risks across relevant jurisdictions

This assessment process should occur throughout the AI lifecycle, from initial data collection through model development, deployment, and ongoing operations.

Policies and Standards

Organizations should develop clear policies specifically addressing:

- Ethical data collection and consent procedures

- Data quality and representativeness requirements

- Model documentation and explainability standards

- Testing and validation processes before deployment

- Ongoing monitoring and audit requirements

- Incident response procedures for ethical concerns

These policies should be living documents, regularly updated to reflect evolving capabilities, risks, and best practices. Industry frameworks like the NIST AI Risk Management Framework provide valuable guidance for developing these policies.

Implementing Ethical AI Data Extraction: A Practical Approach

Security as the Foundation

Ethical AI extraction begins with robust security measures that protect sensitive information throughout its lifecycle:

- Bank-grade encryption for data in transit and at rest

- SOC 2 Type 2 certification ensuring organizational security controls

- Automated processing pipelines that minimize human access to sensitive data

- Granular access controls limiting data visibility to essential personnel

Security experts recommend these measures as the minimum baseline for organizations handling sensitive documents through AI systems.

Privacy-Preserving Document Processing

Advanced privacy-preserving approaches to document extraction include:

- On-device processing that keeps sensitive data local whenever possible

- Federated learning that trains models across distributed data without centralizing it

- Differential privacy techniques that add carefully calibrated noise to prevent individual identification

- Data retention policies that minimize storage duration for sensitive information

Recent advances in privacy-preserving machine learning have made these techniques increasingly practical for production systems.
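To show what "carefully calibrated noise" means in the simplest case, here is a sketch of the Laplace mechanism applied to a counting query, such as the number of invoices from a given vendor. A count changes by at most 1 when one record is added or removed, so noise drawn from a Laplace distribution with scale 1/epsilon gives epsilon-differential privacy. The function names are hypothetical, and this is a teaching example: naive floating-point implementations have known weaknesses, so production systems typically rely on vetted differential privacy libraries.

```python
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Sample Laplace(0, scale) noise.

    The difference of two independent exponential variables with mean
    `scale` follows a Laplace distribution with that scale.
    """
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_count(true_count: int, epsilon: float, rng: random.Random) -> float:
    """Release a count with Laplace noise of scale 1/epsilon (sensitivity 1)."""
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    return true_count + laplace_noise(1.0 / epsilon, rng)


if __name__ == "__main__":
    rng = random.Random()  # illustration only; not a cryptographically secure source
    for epsilon in (0.1, 1.0, 10.0):
        # Smaller epsilon means stronger privacy and a noisier released value.
        print(epsilon, private_count(250, epsilon, rng))
```

The trade-off is visible in the scale: halving epsilon doubles the typical noise, so the privacy budget has to be chosen against how accurate the released statistic needs to be.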

Responsible AI Development Practices

Organizations implementing AI extraction should adopt development practices that embed ethics throughout the process:

- Diverse, representative data collection that reflects the full spectrum of documents the system will process

- Rigorous testing across different demographics and document types

- Regular bias audits examining performance variations

- Documentation of model limitations and appropriate use cases

- Clear processes for handling edge cases and exceptions

Continuous Monitoring and Improvement

Ethical AI governance requires ongoing vigilance through:

- Performance dashboards tracking accuracy across different document types and sources

- Regular fairness assessments examining output variations across demographic groups

- User feedback channels capturing concerns about system behavior

- Periodic third-party audits providing independent evaluation

Veryfi’s Approach to Ethical AI Data Extraction

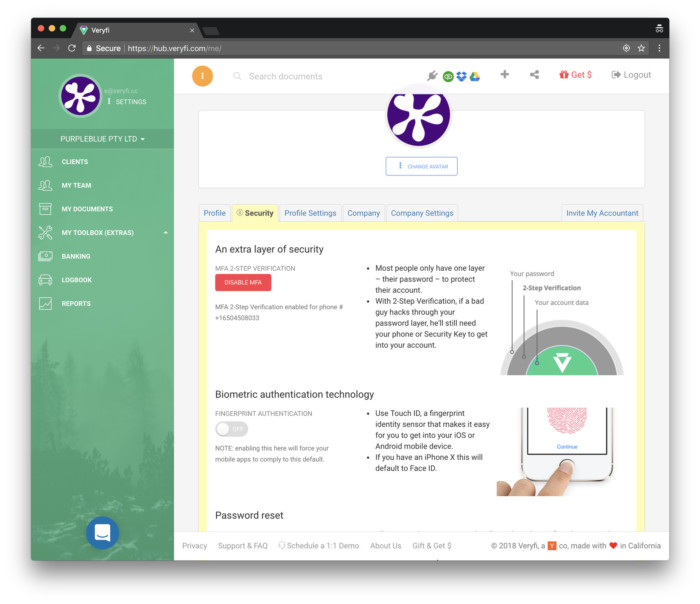

At Veryfi, ethical principles guide our approach to AI-powered document processing:

- 100% automated extraction removes humans from the process, enhancing privacy

- Bank-grade encryption and SOC 2 Type 2 certification ensure data security

- Compliance with CCPA, HIPAA, GDPR, and PIPEDA across jurisdictions

- On-device processing with Veryfi Lens keeps sensitive data local

- User data control ensures information remains within organizational boundaries

- Zero data sharing policies protect confidential information

- Fraud detection capabilities identify manipulated documents using signals such as visuals, OCR, warnings, and device ID

Learn more about Veryfi’s commitment to data privacy and security on our dedicated resources page.

Conclusion: The Path Forward

The ethical considerations surrounding AI-driven data extraction represent fundamental challenges that will shape the future development and societal impact of this technology. Organizations implementing document extraction solutions must develop integrated approaches that combine:

- Technical safeguards for privacy and security

- Organizational policies and governance structures

- Cultural commitments to responsible innovation

- Proactive regulatory engagement and compliance

By embracing comprehensive ethical frameworks that center human rights, dignity, and fairness, organizations can harness the transformative potential of AI-driven data extraction while building sustainable trust with customers, employees, and society.